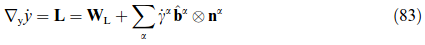

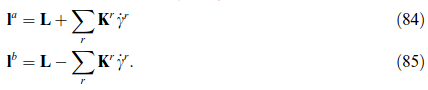

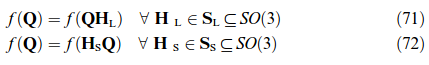

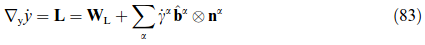

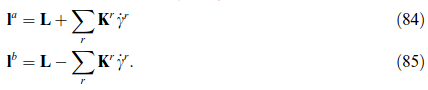

One drawback of the original LAMEL model is its restriction to deformation modes that are compatible with the presumed grain aspect ratio, e.g. pancake-like grains in rolling. This restriction is overcome by two more recent models [20, 318], which focus on the boundary layer between two neighboring grains. Both models apply a relaxation in the plane of the grain boundary (with normal n). The ALAMEL model, introduced by Van Houtte et al. [318], symmetrically relaxes two local velocity gradient components between the neighboring grains such that ∑r Kr = a ⊗ n with a ⊥ n. This is identical to the LAMEL case of pancake grains discussed above. As a result, stress equilibrium at the boundary is maintained except for the normal component [318]. The relaxation proposed by Evers et al. [20] differs slightly: first, the deformation gradient is relaxed symmetrically in both grains by ΔF = ±a ⊗ n; second, the components of a are determined by prescribing full stress equilibrium at the grain boundary, which is equivalent to minimizing the deformation energy. A real grain structure can then be mimicked by enclosing each grain with bicrystalline contacts towards its neighbors. The distribution of interface orientations reflects the (evolving) grain morphology, thus decoupling grain shape from the deformation mode under consideration.
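The link between a symmetric rank-one relaxation and traction equilibrium at the boundary can be illustrated with a deliberately simplified sketch. It is not the formulation of either cited model: the crystal plasticity law is replaced by small-strain linear elasticity of two cubic grains, so that ΔF = ±a ⊗ n reduces to the strain perturbation ±sym(a ⊗ n) and the energy minimum follows from one 3 × 3 linear solve. The function names, the copper-like elastic constants, the 30° misorientation, and the plane-strain test case are all assumptions chosen for illustration.

```python
import numpy as np

def cubic_stiffness(c11, c12, c44):
    """Fourth-order stiffness tensor of a cubic crystal in its own axes."""
    C = np.zeros((3, 3, 3, 3))
    for i in range(3):
        for j in range(3):
            for k in range(3):
                for l in range(3):
                    C[i, j, k, l] = (
                        c12 * (i == j) * (k == l)
                        + c44 * ((i == k) * (j == l) + (i == l) * (j == k))
                        + (c11 - c12 - 2.0 * c44) * (i == j == k == l)
                    )
    return C

def rotate_stiffness(C, R):
    """Express the stiffness tensor in the sample frame (columns of R = crystal axes)."""
    return np.einsum("ia,jb,kc,ld,abcd->ijkl", R, R, R, R, C)

def grain_strains(eps, n, a):
    """Strains of grain A and grain B after the symmetric relaxation +/- sym(a x n)."""
    d_eps = 0.5 * (np.outer(a, n) + np.outer(n, a))
    return eps + d_eps, eps - d_eps

def bicrystal_energy(C_a, C_b, eps, n, a):
    """Sum of the elastic energy densities of both grains (equal volume fractions)."""
    eps_a, eps_b = grain_strains(eps, n, a)
    w_a = 0.5 * np.einsum("ij,ijkl,kl->", eps_a, C_a, eps_a)
    w_b = 0.5 * np.einsum("ij,ijkl,kl->", eps_b, C_b, eps_b)
    return w_a + w_b

def relax_bicrystal(C_a, C_b, eps, n):
    """Solve for a from traction continuity (sigma_A - sigma_B) . n = 0."""
    K_a = np.einsum("j,ijkl,l->ik", n, C_a, n)   # acoustic tensor of grain A
    K_b = np.einsum("j,ijkl,l->ik", n, C_b, n)   # acoustic tensor of grain B
    rhs = np.einsum("ijkl,kl,j->i", C_b - C_a, eps, n)
    return np.linalg.solve(K_a + K_b, rhs)

# Illustrative setup (assumed values, in GPa): copper-like cubic constants
C_crystal = cubic_stiffness(168.4, 121.4, 75.4)
theta = np.deg2rad(30.0)
c, s = np.cos(theta), np.sin(theta)
R_b = np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])  # rotation about x

C_a = C_crystal                       # grain A aligned with the sample frame
C_b = rotate_stiffness(C_crystal, R_b)
eps = np.diag([0.01, 0.0, -0.01])     # plane-strain, rolling-like average strain
n = np.array([0.0, 0.0, 1.0])         # boundary normal (unit vector)

a = relax_bicrystal(C_a, C_b, eps, n)
eps_a, eps_b = grain_strains(eps, n, a)
sig_a = np.einsum("ijkl,kl->ij", C_a, eps_a)
sig_b = np.einsum("ijkl,kl->ij", C_b, eps_b)

print("relaxation vector a:", a)
print("traction jump:", sig_a @ n - sig_b @ n)
```

Because the perturbations in the two grains are equal and opposite, the average strain of the bicrystal stays at the prescribed value for any a. In this linear stand-in the traction condition is exactly the stationarity condition of `bicrystal_energy` with respect to a, which mirrors the equivalence stated in the text.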

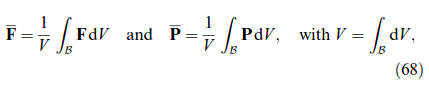

An extension of the mono-directional, and thus anisotropic, two-grain stack considered in the above-mentioned LAMEL model to a tri-directional cluster of 2 × 2 × 2 hexahedral grains is due to Crumbach et al. [319], based on earlier work by Wagner [320]. In this scheme, termed the grain interaction (GIA) model, the overall aggregate is subdivided into four two-grain stacks with the stacking direction aligned with the shortest grain dimension $j'$ and another set of four stacks with the stacking direction aligned with the second-shortest grain dimension $j''$. Relaxation of $E_{ij'}$ ($i = 1, 2, 3$) and $E_{ij''}$ ($i \neq j'$) is performed in the spirit of LAMEL, i.e., via mutually compensating shear contributions of both stacked grains, such that each two-grain stack, and in consequence the cluster as a whole, fulfills the external boundary conditions. To maintain inter-grain compatibility, which may be violated by different strains in neighboring stacks, a density of geometrically necessary dislocations is introduced, which forms the basis for the evaluation of the mismatch penalty energy in equation (86). The key advance of the GIA approach is that it connects the inter-grain misfit penalty measure to material quantities such as the Burgers vector, shear modulus, work-hardening behavior, and grain size. The GIA model formulation is compatible with arbitrary deformation modes and is hence not confined to plane strain. It was recently used in conjunction with a finite element solver, where it served as a texture-dependent homogenization model [321].
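The bookkeeping of the 2 × 2 × 2 cluster can be sketched as follows. This is only an illustration of the stack subdivision and of the compensating relaxations, not the GIA model itself: the relaxation amplitudes are random placeholders, whereas the model determines them by energy minimization including the dislocation-based mismatch penalty. The function names, the axis choice (index 2 as shortest and index 1 as second-shortest grain dimension), and the amplitude scale are assumptions.

```python
import itertools
import numpy as np

GRAINS = list(itertools.product((0, 1), repeat=3))  # positions in the 2x2x2 cluster

def stacks_along(axis):
    """Pairs of grains that differ only in their coordinate along `axis`."""
    pairs = []
    for pos in GRAINS:
        if pos[axis] == 0:
            upper = list(pos)
            upper[axis] = 1
            pairs.append((pos, tuple(upper)))
    return pairs

def relaxed_components(j1, j2):
    """Relaxed strain components: E_ij1 for all i, E_ij2 for i != j1."""
    first = [(i, j1) for i in range(3)]
    second = [(i, j2) for i in range(3) if i != j1]
    return first, second

def relax_cluster(E, j1, j2, rng, scale=1e-3):
    """Superimpose equal and opposite strain increments on the grains of each stack."""
    strains = {pos: E.copy() for pos in GRAINS}
    first, second = relaxed_components(j1, j2)
    for axis, comps in ((j1, first), (j2, second)):
        for lower, upper in stacks_along(axis):
            for i, j in comps:
                amp = scale * rng.standard_normal()  # placeholder amplitude
                d = np.zeros((3, 3))
                d[i, j] += 0.5 * amp
                d[j, i] += 0.5 * amp                 # keep the strain symmetric
                strains[lower] += d                  # one grain of the stack
                strains[upper] -= d                  # compensated in its partner
    return strains

E = np.diag([0.01, 0.0, -0.01])        # prescribed cluster strain (assumed)
rng = np.random.default_rng(0)
first, second = relaxed_components(2, 1)
strains = relax_cluster(E, 2, 1, rng)
mean_strain = sum(strains.values()) / len(GRAINS)

print("stacks along j':", stacks_along(2))
print("relaxed components:", first + second)
print("cluster average preserved:", np.allclose(mean_strain, E))
```

Each increment is cancelled within the stack that carries it, so the cluster average equals the prescribed strain regardless of the amplitudes. Counting the components gives three for the first set of stacks and two for the second, i.e. five relaxation modes per stack pair in total.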